T3Chat Cloneathon Postmortem

- #javascript

- #markdown

- #vue

- #nuxt

T3-Chat Hackathon

I decided to participate in the T3Chat cloneathon for two main reasons:

- I was bored and looking for something to do

- Money is always nice

Although I didn’t end up winning, the experience still taught me a lot, both about software architecture and about Nuxt, so I’d like to share it all in this article. Enjoy!

You can find all the code for my submission here.

Stack

Because I wanted to challenge myself (and because I wanted an excuse to learn more about the framework), I decided to build the whole thing with Nuxt. That way I could learn not only by reading the docs but also by building something real with it, which is how I usually prefer to learn.

There are some other elements to this project that made it all possible:

- Better Auth: to handle authentication

- Drizzle: the ORM I used

- Turso: the db provider I chose

- Zod: for validation

- Pusher: for realtime notifications

- Sockudo: the Pusher-compatible server I used locally

If I had to justify these choices, here’s what I would say. When learning something new, I usually try to learn only that one thing, meaning that (where possible, obviously) I stick to tools I already know so I don’t have to split my attention too many ways. Of the tools listed above, the only ones I hadn’t yet used in a “production” project were Better Auth and Sockudo; both were a breeze to set up and use, so no complaints there.
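Because Sockudo is Pusher-compatible, the server-side code only needs different connection options per environment. The sketch below assumes the official `pusher` npm package, whose constructor accepts options such as `appId`, `key`, `secret`, `cluster`, `host`, `port` and `useTLS`; the environment variable names and the local port 6001 are assumptions, not values taken from the project.

```javascript
// Hypothetical helper that builds the options for `new Pusher(...)` from the
// `pusher` npm package. Env variable names and the default port are assumptions.
function pusherOptions(env) {
  const credentials = {
    appId: env.PUSHER_APP_ID,
    key: env.PUSHER_KEY,
    secret: env.PUSHER_SECRET,
  };

  if (env.NODE_ENV !== "production") {
    // Local Pusher-compatible server (e.g. Sockudo) over plain HTTP.
    return {
      ...credentials,
      host: env.PUSHER_HOST ?? "127.0.0.1",
      port: Number(env.PUSHER_PORT ?? 6001),
      useTLS: false,
    };
  }

  // Hosted Pusher Channels: the SDK derives the API host from the cluster.
  return { ...credentials, cluster: env.PUSHER_CLUSTER, useTLS: true };
}

// Usage (assumes `npm install pusher`):
// const Pusher = require("pusher");
// const pusher = new Pusher(pusherOptions(process.env));
// pusher.trigger("chat-123", "chunk", { text: "Hello" });
```

Everything else (channel names, events, payloads) stays identical between the two environments, which is what makes swapping the local server for the hosted one painless.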

Architecting the solution

The different sections

Let’s take a look at the objectives I had when building my submission:

- multiple providers and models (required)

- authentication and chat sync (required)

- syntax highlighting (nice to have)

- resumable stream (nice to have)

- bring your own key (nice to have)

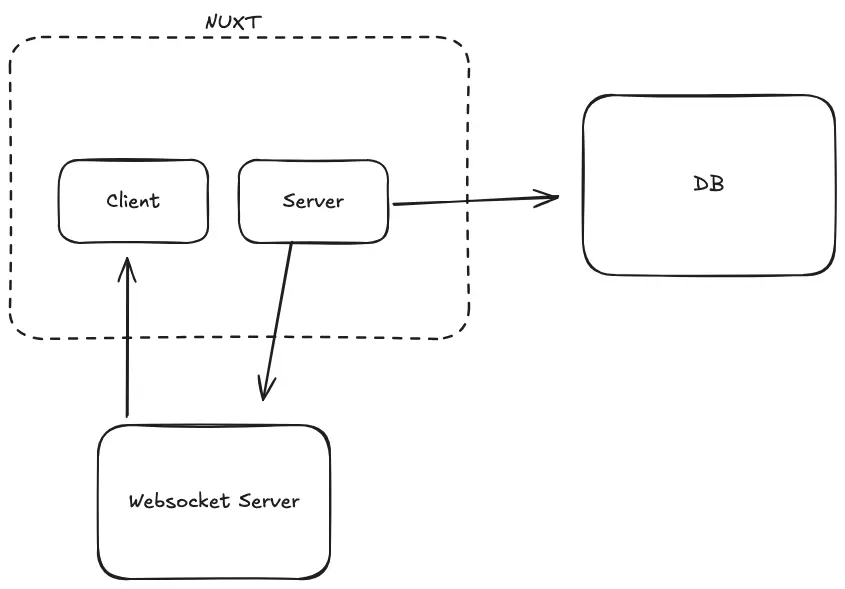

Based on all of these requirements, here’s what I came up with architecture-wise.

Let’s break this down. We have the two obvious sections you would expect in this kind of scenario: the app and the database. But there was one issue I couldn’t (or wouldn’t) solve with just these two elements: realtime updates and resumable streams. To achieve these, I needed a way to decouple the data the client receives from the HTTP request and response it gets when hitting the endpoint. In other words, there needed to be a third service that would receive the data and transmit it to the right user (or channel).

This ended up being Sockudo, a Pusher-compatible service, in development and Pusher Channels in production.
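To make the idea of a third service more concrete, here is an in-memory stand-in for it. This is a hypothetical sketch, not Sockudo’s or Pusher’s API: producers publish chunks to a named channel, and clients can subscribe, drop off and resubscribe without depending on the HTTP request that started the generation. One loud assumption: the hub keeps the text accumulated so far for each channel so that a late subscriber can catch up. Pusher Channels does not replay past events, so in a real app that snapshot would have to live somewhere else (for example in the database).

```javascript
// In-memory stand-in for the realtime service (hypothetical, for illustration).
// Assumption: the hub stores the text received so far per channel so late
// subscribers can catch up; real Pusher Channels does not do this.
class ChannelHub {
  constructor() {
    this.listeners = new Map(); // channel name -> Set of callbacks
    this.snapshots = new Map(); // channel name -> text published so far
  }

  // Called by the generating side for every chunk it receives.
  publish(channel, chunk) {
    const text = (this.snapshots.get(channel) ?? "") + chunk;
    this.snapshots.set(channel, text);
    for (const listener of this.listeners.get(channel) ?? []) listener(chunk);
  }

  // Returns the text generated so far plus a function to stop listening.
  subscribe(channel, listener) {
    if (!this.listeners.has(channel)) this.listeners.set(channel, new Set());
    this.listeners.get(channel).add(listener);
    return {
      snapshot: this.snapshots.get(channel) ?? "",
      unsubscribe: () => this.listeners.get(channel).delete(listener),
    };
  }
}
```

Because the generating code only ever talks to the channel, a page reload is just an unsubscribe followed by a fresh subscribe: the new subscriber receives the snapshot first and then the remaining chunks live.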

What happens when a message is generated

When a message is generated a few things happen:

- the message sent by the user is stored in the database

- we fire off the request to the selected provider with all the data

- we return the status to the user immediately

But I hear you asking:

But how can you return immediately if the provider is not done generating the response?

This is thanks to Nuxt’s waitUntil method, which lets us return early while the work keeps running in the background. The client can carry on doing its thing (i.e. redirecting to the chat page) while the server keeps doing what it needs to do. In turn, this makes it possible to resubscribe to a chat thread’s realtime events after navigating or reloading the page.
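The flow described above can be sketched without any framework. Everything injected into `createHandler` (`db`, `callProvider`, `hub`, `waitUntil`) is a hypothetical stand-in; in the real handler, `waitUntil` would be the Nuxt method mentioned above. What matters is the ordering: persist the user’s message, start the generation without awaiting it, hand the pending work to `waitUntil`, then respond.

```javascript
// Framework-free sketch of the "respond now, keep generating" flow.
// db, callProvider, hub and waitUntil are hypothetical injected stand-ins.
function createHandler({ db, callProvider, hub, waitUntil }) {
  return async function handleSend({ chatId, provider, model, content }) {
    // 1. Store the user's message first.
    db.messages.push({ chatId, role: "user", content });

    // 2. Start streaming from the provider, but do NOT await it here.
    const generation = (async () => {
      let reply = "";
      for await (const chunk of callProvider(provider, model, content)) {
        reply += chunk;
        hub.publish(`chat-${chatId}`, chunk); // forward each chunk to subscribers
      }
      // Persist the full reply once the stream is done.
      db.messages.push({ chatId, role: "assistant", content: reply });
    })();

    // 3. Keep the background work alive after the response is sent...
    waitUntil(generation);

    // ...and answer right away so the client can navigate to the chat page.
    return { status: "generating", chatId };
  };
}
```

A production version would also catch errors inside `generation` (and notify the channel), since nobody awaits that promise once the response has been sent.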

Converting the markdown

Honestly, this section should have been easy. As you might have already read on this blog, I really like the unified ecosystem, so I thought I’d just bring in my usual setup and call it a day. Unfortunately, there were some performance issues (probably due to how quickly the streamed text changes) that made me spend far longer on this than planned. I even tried moving the whole rendering logic to a web worker, but couldn’t get it working in a reasonable amount of time. Fortunately, this was only a problem while new text was streaming in: once a response finished generating, it was rendered to HTML and stored in the database. Otherwise the app would have frozen for a lot longer than it ended up doing (silver lining, I guess?).
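The “render once, then store” part can be sketched like this. `renderMarkdown` and `renderLive` are hypothetical parameters standing in for the unified pipeline, and the `Map` stands in for the database; the point is that only messages that are still streaming pay the cost of client-side rendering.

```javascript
// Sketch: render finished messages to HTML once and reuse the stored result.
// renderMarkdown / renderLive are hypothetical stand-ins for the unified setup.
function createMessageStore(renderMarkdown) {
  const messages = new Map(); // message id -> { markdown, html }

  return {
    // Called once, when the provider has finished generating.
    complete(id, markdown) {
      messages.set(id, { markdown, html: renderMarkdown(markdown) });
    },
    // What the UI shows: stored HTML if the message is done,
    // otherwise the live text still has to be rendered on the fly.
    view(id, liveMarkdown, renderLive) {
      const stored = messages.get(id);
      return stored ? stored.html : renderLive(liveMarkdown);
    },
  };
}
```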

What I would do differently

Honestly, I had a lot of fun doing this cloneathon! It forced me to solve problems I don’t normally worry about in my day job (or in my side projects, for that matter) and to start learning new things. And because hindsight is always 20/20, I thought it might be interesting to recap some of the “lessons” I learnt while working on M3Chat.

Too Much Too Young Too Fast

While I’m happy with the solution I came up with for supporting multiple providers and models, it was overkill and I spent way too much time on it. This point might not hold if I were building a product to take to market, but here the solution was far too complex and caused type-safety issues when crossing from client to server and back. I could definitely have used that time to work on something else.
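For comparison, a much simpler approach could be a single registry object plus one validation function. This is a hypothetical sketch, not the solution used in M3Chat, and the provider and model ids are placeholders. Keeping it as plain data means the client and server could import the same object instead of translating between two representations.

```javascript
// Hypothetical, deliberately simple provider/model registry.
// Provider and model ids are placeholders.
const PROVIDERS = {
  "provider-a": { label: "Provider A", models: ["model-a", "model-b"] },
  "provider-b": { label: "Provider B", models: ["model-c"] },
};

// Validate a (provider, model) pair sent by the client before using it.
function resolveModel(provider, model) {
  // Own-property check so names like "toString" are not treated as providers.
  const entry = Object.prototype.hasOwnProperty.call(PROVIDERS, provider)
    ? PROVIDERS[provider]
    : undefined;
  if (!entry) return { ok: false, error: `Unknown provider: ${provider}` };
  if (!entry.models.includes(model)) {
    return { ok: false, error: `Model ${model} is not offered by ${provider}` };
  }
  return { ok: true, provider, model };
}
```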

The Writing on The Wall

The markdown rendering section is one of the things I’m least proud of in this project. While the markdown renders correctly (most of the time), it takes way too long on the client and causes the app to freeze while the parser initializes. I can see two ways I could have avoided this:

- moving the parser off the main thread, into a separate web worker

- initializing the parser on app load, where the freezing might not have been as noticeable

If I had to do it again, I would definitely choose the first option.
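The second option boils down to creating the parser once and sharing it. A minimal sketch, assuming a hypothetical async `createParser` factory that builds the unified processor: the first call starts initialization, later calls reuse the same promise, and a failed initialization can be retried.

```javascript
// Lazily create an expensive parser once and share it between callers.
// createParser is a hypothetical async factory (e.g. building a unified processor).
function createParserLoader(createParser) {
  let pending = null;
  return function getParser() {
    if (!pending) {
      pending = createParser().catch((err) => {
        pending = null; // allow a retry after a failed initialization
        throw err;
      });
    }
    return pending;
  };
}

// e.g. call getParser() once when the app starts, so the cost is paid
// before the first message needs rendering:
// const getParser = createParserLoader(buildProcessor);
// getParser();
```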

Separate Ways (Worlds Apart)

This point probably also stems from the time limit and, though I’m not using that as an excuse, I do wish I had done this differently. When writing the code that generates the LLM response, I put most of it (including broadcasting the data back to the user) inside the API handler. This is a design decision I still regret, because it made this logic much harder to change than it should have been. If I had to redo this in Nuxt, I’d probably use its event system to isolate these different behaviors (you can read more about it here).
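A minimal sketch of that split, assuming a hookable-style bus (Nuxt’s event system is built on `hookable`, whose core methods are `hook` and `callHook`). The bus below is a tiny standalone stand-in rather than the real library, and the hook names `chat:chunk` and `chat:done` are hypothetical: the generation code only announces what happened, while broadcasting and persistence live in separate listeners.

```javascript
// Tiny stand-in for a hookable-style event bus (not the real library).
function createHooks() {
  const handlers = new Map(); // hook name -> array of async listeners
  return {
    hook(name, fn) {
      if (!handlers.has(name)) handlers.set(name, []);
      handlers.get(name).push(fn);
      // Return a function that unregisters this listener.
      return () => handlers.set(name, handlers.get(name).filter((h) => h !== fn));
    },
    async callHook(name, ...args) {
      // Run listeners one after another, in registration order.
      for (const fn of handlers.get(name) ?? []) await fn(...args);
    },
  };
}

const hooks = createHooks();
const log = [];

// Each concern lives in its own listener instead of inside the API handler.
hooks.hook("chat:chunk", async ({ chatId, chunk }) => log.push(`broadcast ${chatId}:${chunk}`));
hooks.hook("chat:done", async ({ chatId, text }) => log.push(`persist ${chatId}:${text}`));

// The generation code only reports what happened.
async function generate(chatId, chunks) {
  let text = "";
  for (const chunk of chunks) {
    text += chunk;
    await hooks.callHook("chat:chunk", { chatId, chunk });
  }
  await hooks.callHook("chat:done", { chatId, text });
}
```

Swapping Pusher for something else, or adding something like analytics, then means registering another listener rather than editing the handler.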